Advanced AI is exciting, but incredibly dangerous in criminals' hands

- Written by Brendan Walker-Munro, Senior Research Fellow, The University of Queensland

The generative AI[1] industry will be worth about A$22 trillion by 2030[2], according to the CSIRO. These systems – of which ChatGPT is currently the best known – can[3] write essays and code, generate music and artwork, and have entire conversations. But what happens when they’re turned to illegal uses?

Last week, the streaming community was rocked by a headline[4] that links back to the misuse of generative AI. Popular Twitch streamer Atrioc issued a teary-eyed apology video after being caught viewing pornography with the faces of women streamers superimposed on it.

The “deepfake” technology needed to Photoshop a celebrity’s head on a porn actor’s body[5] has been around for a while, but recent advances have made it much harder to detect.

And that’s the tip of the iceberg. In the wrong hands, generative AI could do untold damage. There’s a lot we stand to lose, should laws and regulation fail to keep up.

The same tools used to make deepfake porn videos can be used to fake a US president’s speech. Credit: Buzzfeed.

Read more: Text-to-audio generation is here. One of the next big AI disruptions could be in the music industry[6]

From controversy to outright crime

Last month, generative AI app Lensa came under fire[7] for allowing its system to create fully nude and hyper-sexualised images from users’ headshots. Controversially, it also whitened the skin of women of colour and made their features more European[8].

The backlash was swift. But what’s relatively overlooked is the vast potential to use artistic generative AI in scams. At the far end of the spectrum, there are reports of these tools being able to fake fingerprints and facial scans[9] (the method most of us use to lock our phones).

Criminals are quickly finding new ways to use generative AI to improve the frauds they already perpetrate. The lure of generative AI in scams comes from its ability to find patterns in large amounts of data[10].

Cybersecurity has seen a rise in “bad bots”: malicious automated programs that mimic human behaviour to conduct crime[11]. Generative AI will make these even more sophisticated and difficult to detect.

Ever received a scam text[12] from the “tax office” claiming you had a refund waiting[13]? Or maybe you got a call claiming a warrant was out for your arrest[14]?

In such scams, generative AI could be used to improve the quality of the texts or emails[15], making them much more believable. For example, in recent years we’ve seen AI systems being used to[16] impersonate important figures in “voice spoofing” attacks.

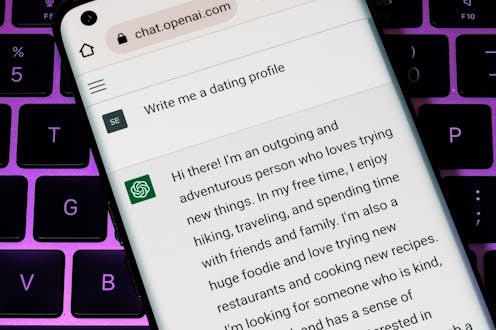

Then there are romance scams[17], where criminals pose as romantic interests and ask their targets for money to help them out of financial distress. These scams are already widespread and often lucrative. Training AI on actual messages between intimate partners could help create a scam chatbot that’s indistinguishable from a human[18].

Generative AI could also allow cybercriminals to more selectively target vulnerable people. For instance, training a system on information stolen from major companies, such as in the Optus or Medibank hacks last year, could help criminals target[19] elderly people, people with disabilities, or people in financial hardship.

Further, these systems can be used to improve computer code[20], which some cybersecurity experts say will make malware and viruses easier to create and harder to detect for antivirus software[21].

The technology is here, and we aren’t prepared

Australia’s[22] and New Zealand’s[23] governments have published frameworks relating to AI, but they aren’t binding rules. Both countries’ laws relating to privacy, transparency and freedom from discrimination aren’t up to the task, as far as AI’s impact is concerned. This puts us behind the rest of the world.

The US has had a legislated National Artificial Intelligence Initiative[24] in place since 2021. And since 2019 it has been illegal in California[25] for a bot to interact with users for commerce or electoral purposes without disclosing it’s not human.

The European Union is also well on the way to enacting the world’s first AI law[26]. The AI Act bans certain types of AI programs posing “unacceptable risk” – such as those used by China’s social credit system[27] – and imposes mandatory restrictions on “high risk” systems.

Although asking ChatGPT to break the law[28] results in warnings that “planning or carrying out a serious crime can lead to severe legal consequences”, the fact is there’s no requirement for these systems to have a “moral code” programmed into them[29].

There may be no limit to what they can be asked to do, and criminals will likely figure out workarounds for any rules intended to prevent their illegal use. Governments need to work closely with the cybersecurity industry to regulate generative AI without stifling innovation, such as by requiring ethical considerations[30] for AI programs.

The Australian government should use the upcoming Privacy Act review[31] to get ahead of potential threats from generative AI to our online identities. Meanwhile, New Zealand’s Privacy, Human Rights and Ethics Framework[32] is a positive step.

We also need to be more cautious as a society about believing what we see online, and remember that humans are traditionally bad[33] at detecting fraud.

Can you spot a scam?

As criminals add generative AI tools to their arsenal, spotting scams will only get trickier. The classic tips[34] will still apply. But beyond those, we’ll learn a lot from assessing the ways in which these tools fall short.

Generative AI is bad at critical reasoning and conveying emotion[35]. It can even be tricked into giving wrong answers[36]. Knowing when and why this happens could help us develop effective methods to catch cybercriminals using AI for extortion.

There are also tools being developed to detect AI[37] outputs from tools such as ChatGPT. These could go a long way towards preventing AI-based cybercrime if they prove to be effective.

Read more: Being bombarded with delivery and post office text scams? Here's why — and what can be done[38]

References

- [1] generative AI (www.mckinsey.com)

- [2] A$22 trillion by 2030 (www.csiro.au)

- [3] can (www.businessinsider.com)

- [4] by a headline (afkgaming.com)

- [5] porn actor’s body (www.bbc.com)

- [6] Text-to-audio generation is here. One of the next big AI disruptions could be in the music industry (theconversation.com)

- [7] Lensa came under fire (futurism.com)

- [8] more European (www.wired.com)

- [9] fake fingerprints and facial scans (fortune.com)

- [10] large amounts of data (www.abc.net.au)

- [11] to conduct crime (www.forbes.com)

- [12] a scam text (theconversation.com)

- [13] refund waiting (www.ato.gov.au)

- [14] out for your arrest (www.cyber.gov.au)

- [15] texts or emails (www.techtarget.com)

- [16] used to (www.wsj.com)

- [17] romance scams (www.accc.gov.au)

- [18] from a human (thediplomat.com)

- [19] could help criminals target (gilescrouch.medium.com)

- [20] improve computer code (www.zdnet.com)

- [21] to detect for antivirus software (www.techtarget.com)

- [22] Australia’s (www.industry.gov.au)

- [23] New Zealand’s (www.data.govt.nz)

- [24] National Artificial Intelligence Initiative (www.ai.gov)

- [25] illegal in California (leginfo.legislature.ca.gov)

- [26] first AI law (artificialintelligenceact.eu)

- [27] social credit system (www.abc.net.au)

- [28] to break the law (www.bleepingcomputer.com)

- [29] programmed into them (www.theregister.com)

- [30] requiring ethical considerations (www.lexisnexis.com.au)

- [31] Privacy Act review (www.ag.gov.au)

- [32] Framework (www.data.govt.nz)

- [33] are traditionally bad (www.apa.org)

- [34] classic tips (theconversation.com)

- [35] critical reasoning and conveying emotion (www.angmohdan.com)

- [36] giving wrong answers (www.reddit.com)

- [37] detect AI (www.techlearning.com)

- [38] Being bombarded with delivery and post office text scams? Here's why — and what can be done (theconversation.com)